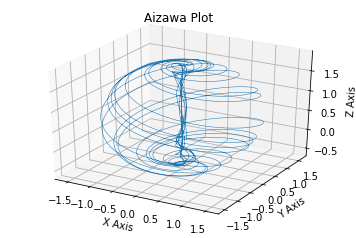

Is a neural network a chaotic system composed of many parts, in which convergence is simply a type of attractor?

H = k_B ln W (Boltzmann's entropy formula, where k_B is Boltzmann's constant and W is the number of microstates)
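
A quick numerical check of the formula. This assumes it is read as Boltzmann's entropy, with k_B = 1.380649e-23 J/K (its exact SI value) and W equally likely microstates. The second function is the more general Gibbs form, which reduces to k_B ln W when every microstate has probability 1/W. The function names are illustrative.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact in the 2019 SI)


def boltzmann_entropy(num_microstates: int) -> float:
    """H = k_B * ln W for W equally likely microstates."""
    if num_microstates < 1:
        raise ValueError("W must be a positive integer")
    return K_B * math.log(num_microstates)


def gibbs_entropy(probs: list[float]) -> float:
    """H = -k_B * sum(p ln p); equals k_B ln W when every p is 1/W."""
    return -K_B * sum(p * math.log(p) for p in probs if p > 0)


if __name__ == "__main__":
    W = 1024
    print(boltzmann_entropy(W))
    print(gibbs_entropy([1 / W] * W))  # same value, up to float rounding
```

A single microstate (W = 1) gives zero entropy; more microstates means more possible disorder.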

High entropy means high temperature, which means random weights and activations.
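
One common way to make "high temperature means random activations" concrete is a temperature-scaled softmax. Dividing the logits by T before normalising flattens the distribution as T grows, so its Shannon entropy rises toward ln(n), the maximum for n outcomes. This is a sketch of that general idea, not necessarily the setup the notes have in mind, and the logits are made-up example values.

```python
import math


def softmax(logits: list[float], temperature: float) -> list[float]:
    """Temperature-scaled softmax; a higher temperature gives a flatter distribution."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def shannon_entropy(probs: list[float]) -> float:
    """Entropy in nats: -sum(p ln p)."""
    return -sum(p * math.log(p) for p in probs if p > 0)


if __name__ == "__main__":
    logits = [2.0, 1.0, 0.1, -1.0]  # example values
    for t in (0.1, 1.0, 10.0, 100.0):
        print(t, round(shannon_entropy(softmax(logits, t)), 4))
    print("max possible:", round(math.log(len(logits)), 4))
```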

Simulated annealing (SA) gradually reduces the temperature as the model fits the data.
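
A minimal simulated annealing loop with a geometric cooling schedule, sketched on a toy 1-D objective rather than a neural network. Uphill moves are accepted with the Metropolis probability exp(-delta / T), so the search is close to a random walk at high T and close to greedy descent at low T. The objective, step size, and cooling rate are arbitrary example choices.

```python
import math
import random


def simulated_annealing(f, x0: float, t_start: float = 10.0, t_end: float = 1e-3,
                        cooling: float = 0.995, step: float = 0.5, seed: int = 0):
    """Minimise f starting from x0, lowering the temperature geometrically each iteration."""
    rng = random.Random(seed)
    x, fx = x0, f(x0)
    best_x, best_f = x, fx
    t = t_start
    while t > t_end:
        candidate = x + rng.uniform(-step, step)
        fc = f(candidate)
        delta = fc - fx
        # Always accept improvements; accept worse moves with probability exp(-delta / t).
        if delta <= 0 or rng.random() < math.exp(-delta / t):
            x, fx = candidate, fc
            if fx < best_f:
                best_x, best_f = x, fx
        t *= cooling  # geometric cooling: the temperature falls as the search settles
    return best_x, best_f


def objective(x: float) -> float:
    """Toy objective with its minimum at x = 3."""
    return (x - 3.0) ** 2


if __name__ == "__main__":
    print(simulated_annealing(objective, x0=-5.0))
```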

In an entropy net, the model fits data at higher temperatures: SA is reversed until the system can be described as a chaotic attractor. The attractor is dynamic; it is the state the model converges to that has the highest entropy (disorder from order).
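
The shift from an ordered attractor to a chaotic one can be illustrated with the logistic map x -> r * x * (1 - x), a standard textbook chaotic system. Here the control parameter r stands in for temperature; that pairing is an analogy we are assuming, not something the notes specify. At low r the orbit settles on a single fixed point, at moderate r on a short cycle, and near r = 3.9 on a chaotic attractor that visits many distinct values.

```python
def attractor_size(r: float, x0: float = 0.2, burn_in: int = 1000,
                   samples: int = 200, decimals: int = 6) -> int:
    """Count distinct (rounded) values the logistic map visits once transients die out."""
    x = x0
    for _ in range(burn_in):  # discard the transient so only the attractor remains
        x = r * x * (1 - x)
    seen = set()
    for _ in range(samples):
        x = r * x * (1 - x)
        seen.add(round(x, decimals))
    return len(seen)


if __name__ == "__main__":
    for r in (2.8, 3.2, 3.5, 3.9):
        print(r, attractor_size(r))
```

The count grows from 1 (fixed point) through 2 and 4 (periodic cycles) to a large number in the chaotic regime.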

The more random it gets, the more it knows about the data, provided it was first pretrained with SA at decreasing temperature.

How do we train it?

Before training, the entropy model has its activation functions, weights, and thresholds mapped to a chaotic function. This is like setting a baseline towards a set of implicit attractors.
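
One possible reading of "mapping weights to a chaotic function before training" is to draw the initial weights from the orbit of a chaotic map instead of a pseudo-random generator. The sketch below assumes the logistic map at r = 4 (fully chaotic on [0, 1]) and rescales its values to a symmetric range; the function name `chaotic_init`, the scale, and the choice of map are hypothetical. Because the map is deterministic, the starting value x0 acts like a seed, and nearby seeds quickly diverge into different weights.

```python
def chaotic_init(n_weights: int, x0: float = 0.123, scale: float = 0.1,
                 r: float = 4.0, burn_in: int = 100) -> list[float]:
    """Hypothetical initialiser: weights from a logistic-map orbit, mapped to [-scale, scale]."""
    if not 0.0 < x0 < 1.0:
        raise ValueError("x0 must lie strictly between 0 and 1")
    x = x0
    for _ in range(burn_in):  # skip early iterates so the weights do not echo x0
        x = r * x * (1 - x)
    weights = []
    for _ in range(n_weights):
        x = r * x * (1 - x)
        weights.append(scale * (2 * x - 1))  # map [0, 1] onto [-scale, scale]
    return weights


if __name__ == "__main__":
    print(chaotic_init(5, x0=0.123))
    print(chaotic_init(5, x0=0.123000001))  # a tiny change in the "seed"
```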

The model then begins in a highly ordered state, reached by training with cross-entropy, KL divergence, or contrastive divergence objectives (or plain SGD), which lowers the temperature of the system.
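
Two of these objectives are directly related. For a target distribution p and a model distribution q, cross-entropy decomposes as H(p, q) = H(p) + KL(p || q), so minimising cross-entropy against fixed data is equivalent to minimising the KL divergence. Contrastive divergence is a different, sampling-based procedure and is not shown. The distributions below are made-up examples.

```python
import math


def entropy(p: list[float]) -> float:
    """H(p) = -sum p log p, in nats."""
    return -sum(pi * math.log(pi) for pi in p if pi > 0)


def cross_entropy(p: list[float], q: list[float]) -> float:
    """H(p, q) = -sum p log q, in nats."""
    return -sum(pi * math.log(qi) for pi, qi in zip(p, q) if pi > 0)


def kl_divergence(p: list[float], q: list[float]) -> float:
    """KL(p || q) = sum p log(p / q); zero only when p equals q."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


if __name__ == "__main__":
    target = [0.7, 0.2, 0.1]  # example "data" distribution
    model = [0.5, 0.3, 0.2]   # example model prediction
    print(cross_entropy(target, model))
    print(entropy(target) + kl_divergence(target, model))  # same value
```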

Then it trains with increasing temperature, using data specific to each goal, which results in a disordered, high-entropy state; each goal is a unique attractor. This is possible because the model's parameters have been shaped for this purpose.
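
The two training phases described here can be written down as a temperature schedule: geometric cooling during pretraining, then geometric heating during the goal-specific phase. This sketch only produces the schedule. The function name, rates, and phase lengths are assumptions, and how the temperature is applied (for example as a noise scale or a softmax temperature) is left to the training loop.

```python
def two_phase_schedule(t_high: float, t_low: float, cool_steps: int,
                       heat_steps: int, t_peak: float) -> list[float]:
    """Hypothetical schedule: cool from t_high to t_low, then heat from t_low to t_peak."""
    if min(cool_steps, heat_steps) < 1:
        raise ValueError("each phase needs at least one step")
    cool_ratio = (t_low / t_high) ** (1 / cool_steps)
    heat_ratio = (t_peak / t_low) ** (1 / heat_steps)
    # Phase 1 (ordering): temperature falls geometrically from t_high to t_low.
    temps = [t_high * cool_ratio ** i for i in range(cool_steps + 1)]
    # Phase 2 (goal-specific): temperature rises geometrically from t_low to t_peak.
    temps += [t_low * heat_ratio ** i for i in range(1, heat_steps + 1)]
    return temps


if __name__ == "__main__":
    schedule = two_phase_schedule(t_high=1.0, t_low=0.01, cool_steps=5, heat_steps=5, t_peak=2.0)
    print([round(t, 4) for t in schedule])
```

Geometric steps are used in both directions so that each phase changes the temperature by a constant factor per step; a linear schedule would work just as well for a sketch.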

This is similar to using multiple seeds to get different solutions to a problem: chaos and convergence (attractors) are used to solve similar problems differently.
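
The idea that a seed picks the attractor can be seen in a toy case: plain gradient descent on the double-well f(x) = (x^2 - 1)^2, which has two stable minima (attractors) at x = -1 and x = +1. The seed only chooses the starting point, and the basin that point falls in decides the solution. This is an illustrative toy problem, not a neural network; the learning rate and step count are arbitrary.

```python
import random


def grad(x: float) -> float:
    """Derivative of f(x) = (x**2 - 1)**2."""
    return 4 * x * (x * x - 1)


def solve(seed: int, lr: float = 0.01, steps: int = 5000) -> float:
    """Gradient descent from a seed-dependent start; returns the attractor it settles in."""
    x = random.Random(seed).uniform(-2.0, 2.0)  # problem + seed -> starting point
    for _ in range(steps):
        x -= lr * grad(x)
    return x


if __name__ == "__main__":
    for seed in range(6):
        print(seed, round(solve(seed), 4))  # each run ends near -1.0 or +1.0
```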

What we think of as more random and more chaotic is actually our model fitting more data using a complex chaotic attractor.

Isn't complexity just chaos to the unknowing?

Essentially, this uses chaos and entropy to fit more information into our NN, if we think of each NN as having a set of unique solutions to any problem, which can be defined as attractors with seeds. If we increase entropy and temperature, we can create space for more attractors: chaotic attractors with random distributions across the model's parameter space, resulting in complex, seed-dependent solutions.

higher entropy = more problems + more seeds/solutions

problem + seed = solution (attractor)

Switch from low-energy models to high-energy ones using chaos. We currently use stochastic parameters in our noisy activation functions, and this will steer the model towards the above.
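
A minimal noisy activation in the spirit of this paragraph: a tanh whose slope is perturbed by Gaussian noise scaled by a temperature, so T = 0 recovers the ordinary deterministic activation and larger T injects more randomness. The parameterisation (noise on the slope, standard deviation equal to T) and the name `noisy_tanh` are assumptions for illustration, not the noisy activation functions the notes actually use.

```python
import math
import random


def noisy_tanh(x: float, temperature: float, rng: random.Random) -> float:
    """Hypothetical tanh with a stochastic slope; the noise standard deviation is the temperature."""
    if temperature < 0:
        raise ValueError("temperature must be non-negative")
    # At temperature 0 the slope is exactly 1 and no random number is drawn.
    slope = (1.0 + rng.gauss(0.0, temperature)) if temperature > 0 else 1.0
    return math.tanh(slope * x)


if __name__ == "__main__":
    rng = random.Random(42)
    for t in (0.0, 0.1, 1.0):
        print(t, [round(noisy_tanh(0.5, t, rng), 4) for _ in range(3)])
```

Passing an explicit `random.Random` instance keeps runs reproducible per seed, which matches the notes' framing of seeds selecting solutions.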

problems have multiple solutions using different seeds
